Delete dataset files using an ETL Job

To delete dataset files using an ETL job, the following parameters are required:

API Url

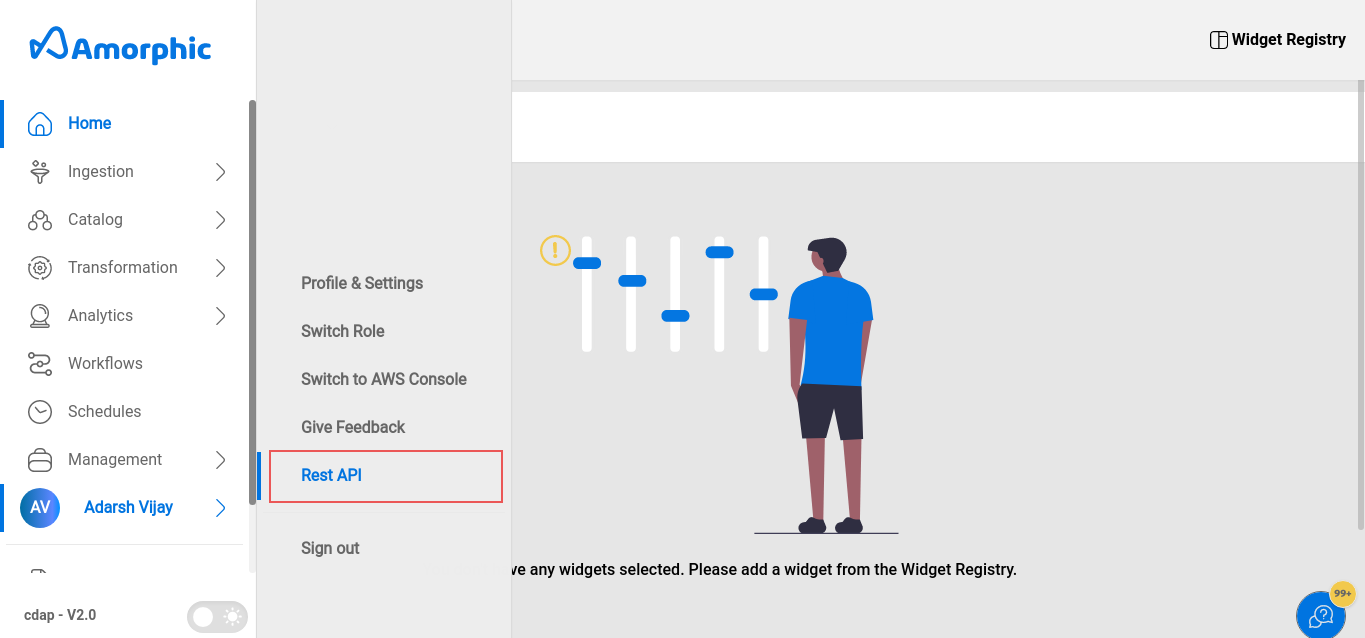

The API URL can be retrieved from the REST API page.
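The sample code in this section joins this base URL with the resource paths `/datasets/{dataset_id}` and `/datasets/{dataset_id}/files`. Here is a minimal sketch of that joining step. It assumes the copied URL may or may not end with a slash. `dataset_endpoint` is a hypothetical helper, not part of any Amorphic SDK.

```python
def dataset_endpoint(api_url: str, dataset_id: str, files: bool = False) -> str:
    """Build a dataset endpoint URL from the base API URL (hypothetical helper)."""
    # Strip any trailing slash so the path is not doubled ("...develop//datasets")
    base = api_url.rstrip("/")
    url = f"{base}/datasets/{dataset_id}"
    if files:
        # The files sub-resource is used for listing and deleting dataset files
        url += "/files"
    return url


print(dataset_endpoint("https://somehashkey.execute-api.aws-region.amazonaws.com/develop/", "uuid", files=True))
# → https://somehashkey.execute-api.aws-region.amazonaws.com/develop/datasets/uuid/files
```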

Authorization Token

This token is required for making calls to Amorphic APIs. Follow the steps shown in the accompanying image to create an Authorization Token.

Role Id

The role ID is available when a user lists the details of a role.
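The sample code in this section sends the Authorization Token and the role ID as request headers (`Authorization` and `role_id`) on every call. Here is a small sketch that builds those headers once. It assumes the header names used in the sample. `amorphic_headers` is a hypothetical helper name.

```python
def amorphic_headers(authorization_token: str, role_id: str) -> dict:
    """Return the headers the sample sends on every Amorphic API call (hypothetical helper)."""
    if not authorization_token or not role_id:
        # Fail early instead of sending a request that is likely to be rejected
        raise ValueError("authorization_token and role_id must both be set")
    return {
        "Content-Type": "application/json",
        "Authorization": authorization_token,
        "role_id": role_id,
    }
```

Building the headers in one place keeps the token and role ID consistent across the details, list and delete calls.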

Dataset Id

The dataset ID is available when a user lists the details of a dataset.
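The sample code in this section hardcodes these four values, but its comments note that they can be kept in a parameter store instead. Here is a minimal sketch that reads them with the AWS Systems Manager `get_parameter` call from boto3. It assumes the values are stored under hypothetical parameter names such as `/amorphic/etl/api_url`. The SSM client is passed in, so the function can be exercised without AWS credentials.

```python
def load_amorphic_config(ssm_client, prefix: str = "/amorphic/etl") -> dict:
    """Read the four required values from SSM Parameter Store.

    The parameter names under `prefix` are hypothetical; adjust them to
    wherever the values are actually stored.
    """
    config = {}
    for key in ("api_url", "authorization_token", "role_id", "dataset_id"):
        response = ssm_client.get_parameter(
            Name=f"{prefix}/{key}",
            WithDecryption=True,  # Decrypts the value if it is stored as a SecureString
        )
        config[key] = response["Parameter"]["Value"]
    return config
```

In a job, this could be called as `load_amorphic_config(boto3.client("ssm"))`, provided the job's IAM role is allowed to read those parameters.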

Below is a sample piece of code that checks whether a dataset exists and, if it does, deletes the first two files in the dataset.

```python
import json

import requests

# Required variables. For security purposes, these values can be stored in
# AWS Systems Manager Parameter Store and read at runtime instead of being hardcoded.
authorization_token = "some-jwt-token"
api_url = "https://somehashkey.execute-api.aws-region.amazonaws.com/develop"
role_id = "admin-role-uuid"
dataset_id = "uuid"

# Headers sent with every Amorphic API call
headers = {
    "Content-Type": "application/json",
    "Authorization": authorization_token,
    "role_id": role_id,
}


def dataset_files_list():
    """Return the list of file names in the dataset."""
    dataset_files = requests.get(
        url="{api_url}/datasets/{dataset_id}/files".format(api_url=api_url, dataset_id=dataset_id),
        headers=headers,
    )
    if dataset_files.status_code != 200:
        print("List dataset files API call failed with status code %s" % dataset_files.status_code)
        # Use the raw body: an error response is not guaranteed to be valid JSON
        raise Exception("List dataset files API call failed with response %s" % dataset_files.text)
    list_of_files = dataset_files.json().get("files", [])
    return [file_item["FileName"] for file_item in list_of_files]


# Check whether the dataset exists
dataset_details = requests.get(
    url="{api_url}/datasets/{dataset_id}".format(api_url=api_url, dataset_id=dataset_id),
    headers=headers,
)
if dataset_details.status_code != 200:
    print("Get dataset details API call failed with status code %s" % dataset_details.status_code)
    raise Exception("Get dataset details API call failed with response %s" % dataset_details.text)

# List all the files in the dataset
list_of_file_names = dataset_files_list()
print("Dataset files list retrieved: %s" % list_of_file_names)

# Delete the first two files in the dataset
delete_files = requests.put(
    url="{api_url}/datasets/{dataset_id}/files".format(api_url=api_url, dataset_id=dataset_id),
    headers=headers,
    data=json.dumps({
        "Operation": "permanent_delete",  # Other available operations are restore and delete
        "Files": list_of_file_names[:2],
        "TruncateDataset": False,  # TruncateDataset can only be true for permanent_delete
    }),
)
if delete_files.status_code != 200:
    print("Delete files API call failed with status code %s" % delete_files.status_code)
    raise Exception("Delete files API call failed with response payload %s" % delete_files.text)

# List the files in the dataset again to confirm the deletion
list_of_file_names = dataset_files_list()
print("Dataset files list retrieved post deletion: %s" % list_of_file_names)
```

To use the above code, add the `requests` package to the job as a shared ETL library or an external library. For an application that has IP whitelisting enabled, the NAT Gateway IP has to be whitelisted to access API Gateway if the job's Network Configuration is set to App-Private.
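The inline comments in the sample state two rules for the delete call. `Operation` accepts `permanent_delete`, `delete` or `restore`, and `TruncateDataset` can only be true for `permanent_delete`. Here is a sketch that builds the request body and checks both rules before any request is sent. `build_delete_payload` is a hypothetical helper. Its checks only mirror those comments, not a published API schema.

```python
import json

# Operations listed in the sample's inline comments
ALLOWED_OPERATIONS = {"permanent_delete", "delete", "restore"}


def build_delete_payload(files, operation="permanent_delete", truncate_dataset=False):
    """Build the JSON body for PUT {api_url}/datasets/{dataset_id}/files (hypothetical helper)."""
    if operation not in ALLOWED_OPERATIONS:
        raise ValueError(f"Unsupported operation: {operation!r}")
    if truncate_dataset and operation != "permanent_delete":
        raise ValueError("TruncateDataset can only be true for permanent_delete")
    return json.dumps({
        "Operation": operation,
        "Files": list(files),
        "TruncateDataset": truncate_dataset,
    })
```

The sample's `data=json.dumps({...})` argument could then be replaced with `data=build_delete_payload(list_of_file_names[:2])`. A mistyped operation would then fail inside the job rather than at the API.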